Autonomous Racecar Simulation

Most of the code for this project is available in a GitHub repository.

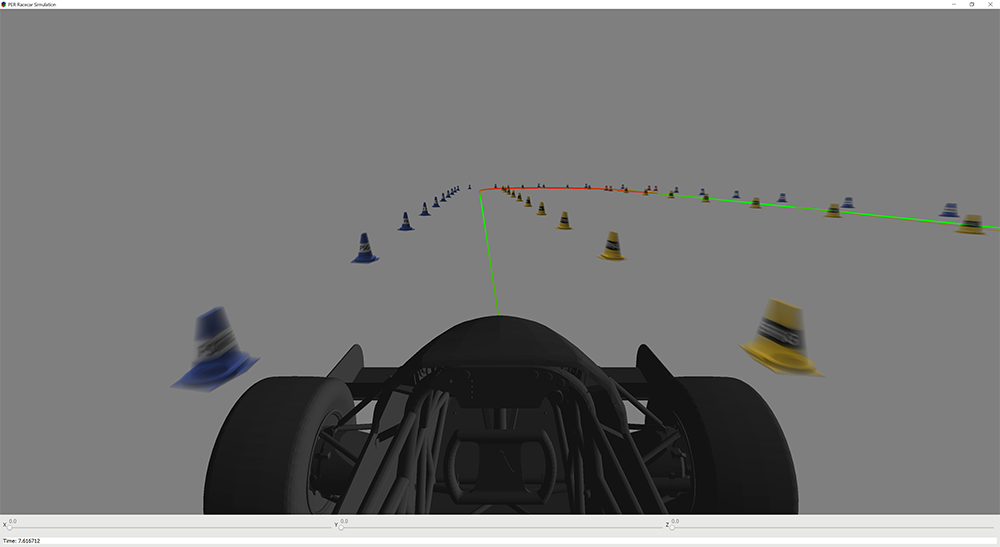

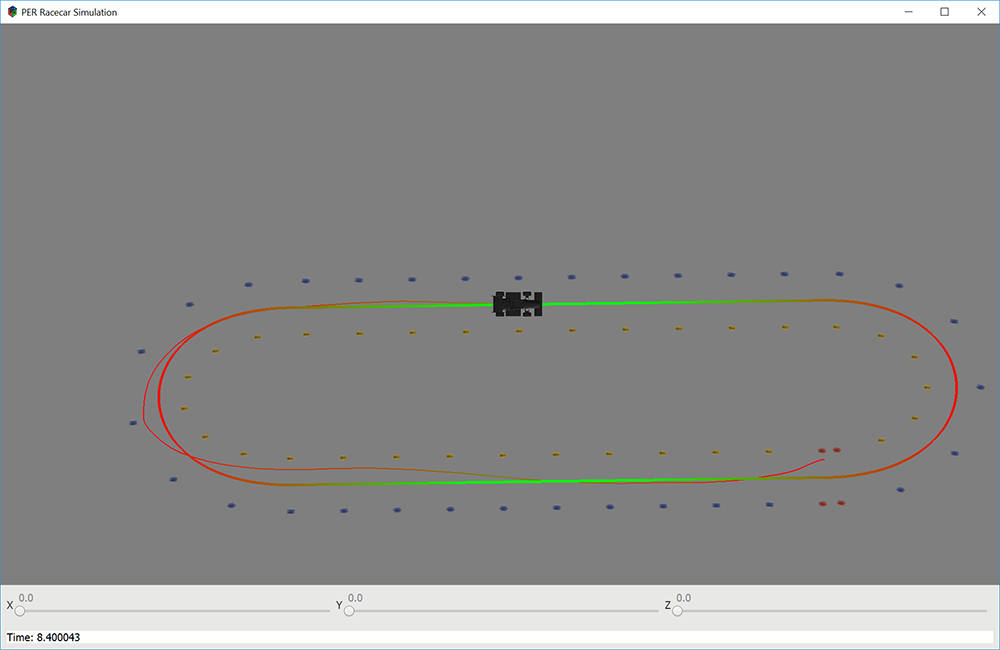

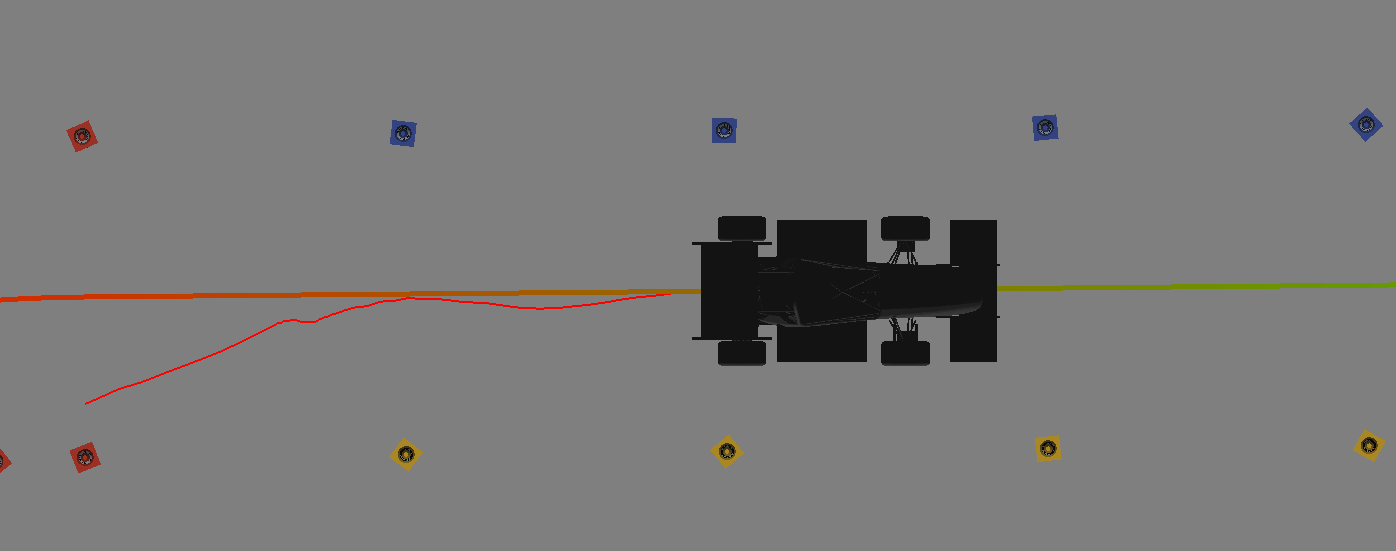

On Penn Electric Racing, we've been thinking about making our next racecar autonomous for some time, so when I found out that two of my classes had open-ended final projects, I decided to take the opportunity to start working on the problem. I began by developing a simulation environment with a car physics model. I decided not to use Gazebo because I wanted more flexibility and thought I'd learn more. The work started with the graphics: designing a cone model and texture to match the competition cones, and convincing SolidWorks to export a simplified version of the actual racecar top assembly without crashing. I implemented motion blur in OpenGL using transparency to blend, because it was good enough and I didn't have time to implement a better method. The OpenGL context lives inside a gtkmm window, which makes it possible to add more UI elements, and everything is compiled with a CMake build system based on what PER uses.
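As a rough illustration of blending-based motion blur, here is a minimal Python sketch. It is not the project's OpenGL code: the function name `blend_frame`, the flat list-of-floats frame representation, and the choice of a single running accumulation are all assumptions made for the example. The idea is that each new frame is drawn partially transparent over what is already on screen, so older frames fade out gradually and leave a trail.

```python
def blend_frame(accumulated, new_frame, alpha):
    """Blend new_frame over accumulated with opacity alpha (0 to 1).

    Both frames are flat lists of pixel intensities of equal length.
    This mirrors standard alpha blending: alpha * src + (1 - alpha) * dst.
    """
    return [alpha * n + (1.0 - alpha) * a for a, n in zip(accumulated, new_frame)]


# A bright pixel that goes dark fades over several frames instead of vanishing.
screen = [1.0]
for _ in range(2):
    screen = blend_frame(screen, [0.0], alpha=0.5)
```

A lower `alpha` keeps more of the previous frames and gives a longer blur trail; `alpha = 1.0` disables the effect entirely.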

I experimented with different physics models until I had something that seemed to match the dynamics of the actual racecar. The model computes the velocity of each tire relative to the ground and uses the torque request for that wheel (we have four-wheel drive) to determine the force on it. The forces from the four wheels are combined to find the net force and moment on the car, and the simulation uses fourth-order Runge-Kutta (RK4) integration to update the racecar state over time.
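The last two steps can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not the simulator's code: the names `rk4_step` and `net_force_and_moment`, the planar (x, y) body frame with x forward and y to the left, and the list-of-floats state are all made up for the example, and the tire model that produces the per-wheel forces is left out.

```python
import math


def rk4_step(f, state, u, dt):
    """Advance `state` by one step of size dt using classical RK4.

    f(state, u) must return the time derivative of state as a list of floats.
    """
    def add(a, b, scale):
        return [ai + scale * bi for ai, bi in zip(a, b)]

    k1 = f(state, u)
    k2 = f(add(state, k1, dt / 2), u)
    k3 = f(add(state, k2, dt / 2), u)
    k4 = f(add(state, k3, dt), u)
    return [
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    ]


def net_force_and_moment(wheel_positions, wheel_forces):
    """Sum planar wheel forces and their yaw moments about the center of mass.

    Positions and forces are (x, y) tuples in the car's body frame.
    """
    fx = sum(f[0] for f in wheel_forces)
    fy = sum(f[1] for f in wheel_forces)
    # The 2D cross product r x F is each wheel's contribution to the yaw moment.
    mz = sum(r[0] * f[1] - r[1] * f[0] for r, f in zip(wheel_positions, wheel_forces))
    return fx, fy, mz


# Sanity check of the integrator on dx/dt = -x, whose exact solution is exp(-t).
state = [1.0]
for _ in range(100):
    state = rk4_step(lambda s, u: [-s[0]], state, None, 0.01)

# Driving only the left wheels (y > 0) produces a net yaw moment to the right.
positions = [(1.0, 0.5), (1.0, -0.5), (-1.0, 0.5), (-1.0, -0.5)]
forces = [(2.0, 0.0), (0.0, 0.0), (2.0, 0.0), (0.0, 0.0)]
fx, fy, mz = net_force_and_moment(positions, forces)
```

In a full simulator, `f` would call something like `net_force_and_moment` internally to turn wheel forces into linear and angular accelerations, and RK4 would evaluate it four times per time step.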

Once the physics worked well, I started developing a model predictive controller for my Control and Optimization with Applications in Robotics (MEAM517) project. The first step was using gradient descent to find the nominal control inputs that make the racecar follow the path. On its own this could already mostly follow the path, but the control inputs update much more slowly than the dynamics, so errors accumulate over time. In hindsight, I should have also added some form of PID controller on top of this to improve accuracy. Instead, I went straight to implementing a model predictive control (MPC) algorithm to correct for the error, because that was the focus of the project. MPC involves simulating ahead with preliminary control inputs and solving a quadratic optimization problem to find adjustments to those control inputs that minimize trajectory error. After some debugging, I got this to work reasonably well, especially at lower speeds, farther from the traction limit. However, it would get stuck on the second corner of the oval course, likely because of an angle wraparound bug I didn't have time to finish debugging. Even with that fixed, I think a PID controller would make it perform better. More details are documented in the final report I wrote.
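For context on the suspected bug: angle wraparound problems usually show up when a heading error is computed by plain subtraction, so that a car at 179° aiming for -179° sees an error of -358° instead of +2°. The actual bug was never diagnosed, so the following is only a sketch of the standard remedy for that class of problem, with hypothetical helper names `wrap_angle` and `heading_error`.

```python
import math


def wrap_angle(theta):
    """Map any angle in radians to the interval [-pi, pi)."""
    return (theta + math.pi) % (2 * math.pi) - math.pi


def heading_error(target, current):
    """Smallest signed rotation from `current` to `target`, in radians."""
    return wrap_angle(target - current)


# Crossing the +/-180 degree boundary gives a small error, not a near-full turn.
err = heading_error(math.radians(-179), math.radians(179))
```

Applying a wrap like this wherever two headings are subtracted keeps the trajectory error continuous as the car goes around a closed course such as an oval.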

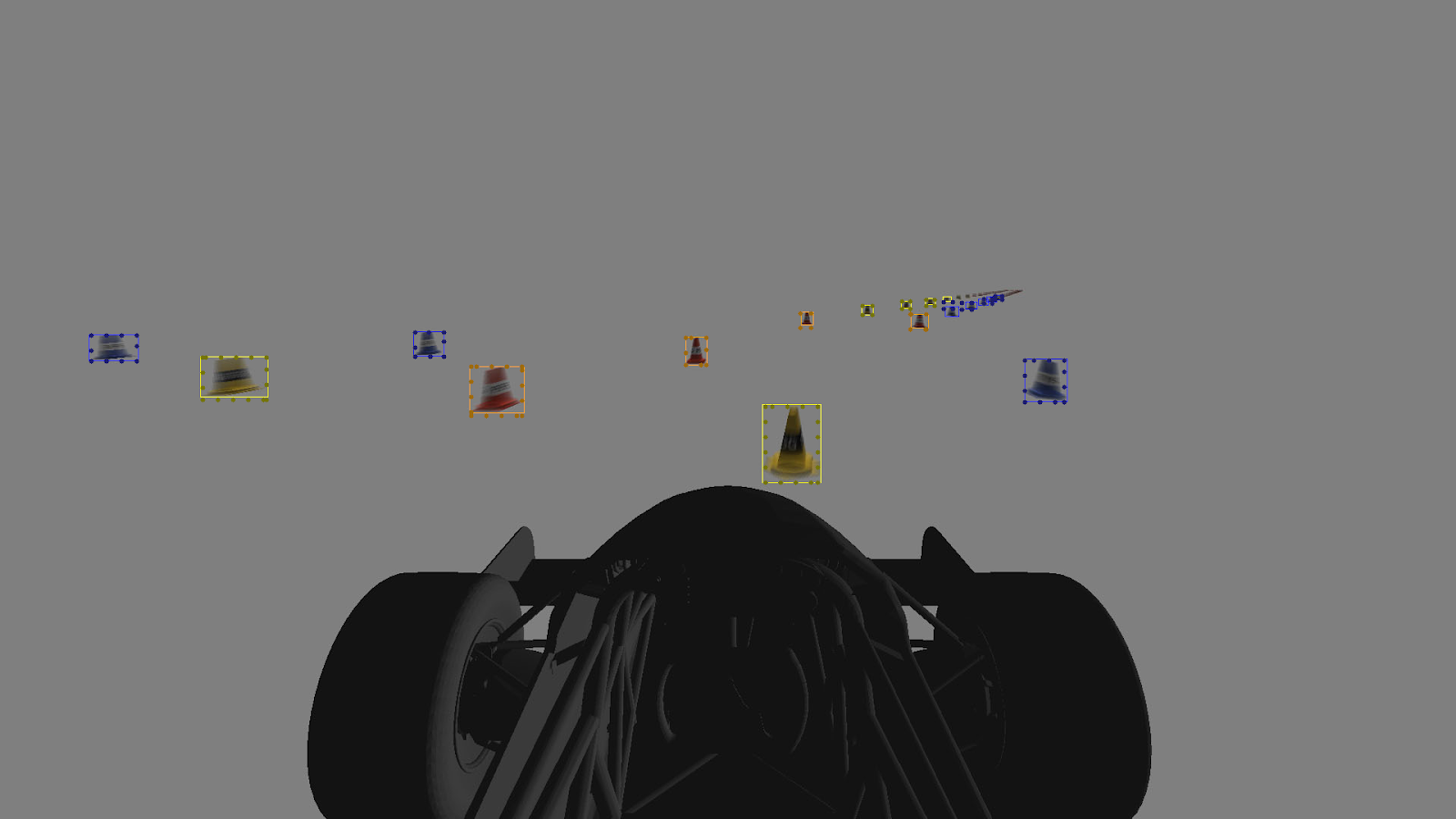

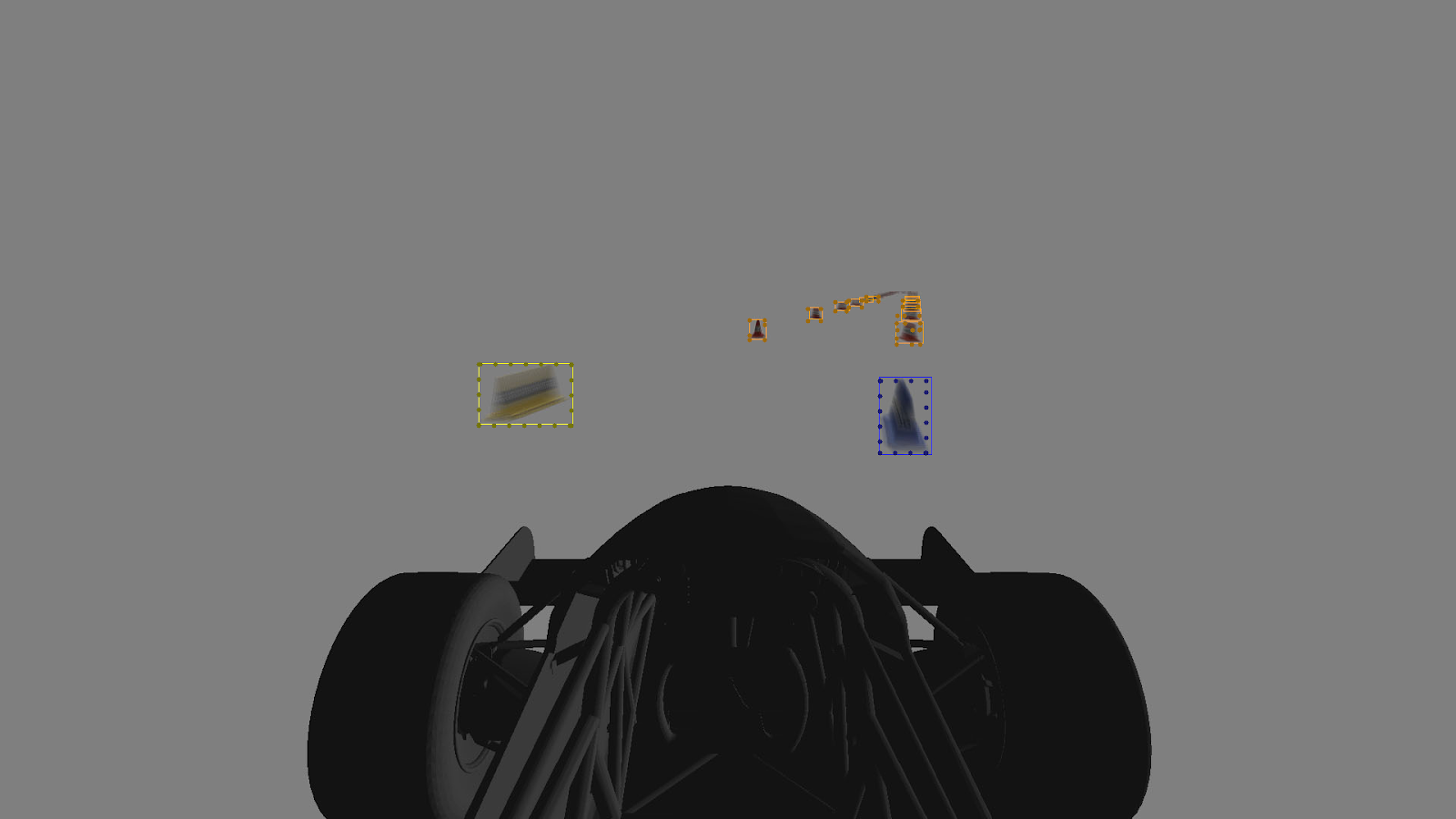

I also used the simulation for my Applied Machine Learning (CIS519) final project, which I worked on with Raphael. I drove the car around and saved the images into a few training and testing datasets. We trained a neural network (YOLOv3) to recognize the cones in the images, using bounding box labels generated by the simulation. After a week of training, it could produce bounding boxes that nearly matched the ground truth. It was also very good at distinguishing between cone types. Unfortunately, the network takes too long to evaluate to be used in real time, but it still served as a good baseline and proof of concept.
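The write-up does not say how the match between predicted and ground-truth boxes was measured. A common way to quantify it is intersection over union (IoU), sketched below in Python as an illustration only; the function name `iou` and the `(x_min, y_min, x_max, y_max)` box layout are assumptions for the example.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is a tuple (x_min, y_min, x_max, y_max) in pixel coordinates.
    Returns a value between 0.0 (no overlap) and 1.0 (identical boxes).
    """
    # Overlap extent along each axis, clamped at zero when the boxes are disjoint.
    iw = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    ih = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = iw * ih

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes offset by one pixel in each direction overlap in a 1x1 square.
score = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

A predicted box that "nearly matches" its label would score close to 1.0 under this metric.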

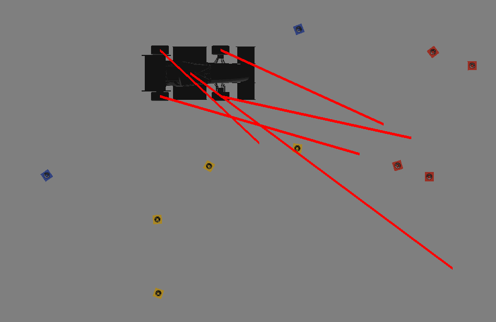

A second neural network then uses this data to determine the cone positions in 3D space. Its inputs are the bounding box output by the first neural network plus extra features based on the car's velocity and angular velocity, and its output is the cone position. The velocity data allows it to account for the motion blur; without it, the average error is several times higher. The average position error was a little over a meter, and the network was actually more accurate when run on the network-labelled data than on the ground truth, due to a combination of there being fewer far-away cones and the boxes better representing where the cone was. This approach seems to be a good way of dealing with motion blur and will likely be used for the actual racecar once we find a fast enough way to locate the cones in an image.